Py4JJavaError: An error occurred while calling z. usr/local/lib/python3.6/site-packages/py4j/protocol.py in get_return_value(answer, gateway_client, target_id, name)ģ18 "An error occurred while calling. > 1133 answer, self.gateway_client, self.target_id, self.name) usr/local/lib/python3.6/site-packages/py4j/java_gateway.py in _call_(self, *args)ġ131 answer = self.gateway_nd_command(command) > 809 port = self.ctx._(self._jrdd.rdd())Ĩ10 return list(_load_from_socket(port, self._jrdd_deserializer)) usr/local/lib/python3.6/site-packages/pyspark/rdd.py in collect(self)Ĩ08 with SCCallSiteSync(ntext) as css:

> 906 vals = self.mapPartitions(func).collect() usr/local/lib/python3.6/site-packages/pyspark/rdd.py in fold(self, zeroValue, op)ĩ04 # zeroValue provided to each partition is unique from the one provided > 1032 return self.mapPartitions(lambda x: ).fold(0, operator.add) usr/local/lib/python3.6/site-packages/pyspark/rdd.py in sum(self) > 1041 return self.mapPartitions(lambda i: ).sum() usr/local/lib/python3.6/site-packages/pyspark/rdd.py in count(self) usr/local/lib/python3.6/site-packages/pyspark/rdd.py in takeSample(self, withReplacement, num, seed) Py4JJavaError Traceback (most recent call last) (For background, the "python" command was pointing to Python 2, but I created a Python 3 notebook, so the workers were using the default Python command pointed to by "python" (version 2) while the notebook, running the PySpark master, was using Python version 3.) When I ran the above test, I saw this error that the workers were running Python 2 and the master was running Python 3: Start up Jupyter and create a new notebook: You may also need to install pyspark using pip:

To use PySpark through a Jupyter notebook, instead of through the command line, first make sure your Jupyter is up to date: Test that it's ok by checking if the sc variable is holding a Spark context: is not enabled so recording the schema version 1.2.0ġ7/09/26 17:53:16 WARN ObjectStore: Failed to get database default, returning NoSuchObjectExceptionġ7/09/26 17:53:17 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException using builtin-java classes where applicableġ7/09/26 17:53:16 WARN ObjectStore: Version information not found in metastore. For SparkR, use setLogLevel(newLevel).ġ7/09/26 17:53:09 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform. To adjust logging level use sc.setLogLevel(newLevel). Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties Type "help", "copyright", "credits" or "license" for more information. This should look a bit like Python, but with a Spark splash message: Test it out by running the pyspark command. Now set the $SPARK_HOME environment variable to wherever your Spark lives:Įxport SPARK_HOME="/path/to/unzipped/spark-2.2" Now unzip the Spark source, enter the directory, and run:Įnsure Spark was built correctly by running this command from the same directory: $ sudo apt-key adv -keyserver hkp://:80 -recv 642AC823 $ echo "deb /" | sudo tee -a /etc/apt//sbt.list

Now get the Scala build tool into aptitude (see ): I was still getting problems importing pyspark, so I also ended up running a

Brew install apache spark 2.1 how to#

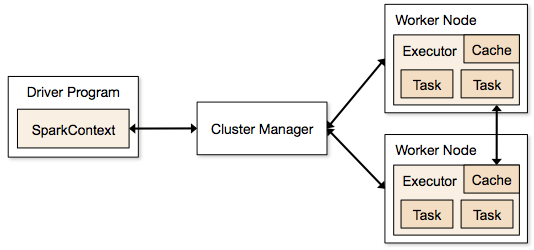

Nice explanation of how to set it up with either a standalone (single node) or Mesos cluster in the PySpark notebook image's README: īasically, here are the first few lines of a standalone notebook:Įnsure you have the following software installed: This Docker image is provided courtesy of the Jupyter project on Github: This enables you to connect to a Mesos-managed cluster and use compute resources on that cluster. This comes bundled with Apache Mesos, which is a cluster resource management framework. Use the Jupyter PySpark notebook Docker container:
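The background note above describes a driver/worker mismatch: the notebook runs Python 3, while the workers launch whatever the plain `python` command points to. The sketch below shows one common way to line them up. It is not the fix described in this post. It assumes Spark 2.x behaviour, where PySpark reads the `PYSPARK_PYTHON` environment variable when the `SparkContext` is created and falls back to plain `python` if the variable is unset. The helper names `python_version_of` and `pin_pyspark_python` are hypothetical.

```python
import os
import shutil
import subprocess
import sys


def python_version_of(executable):
    """Return the 'major.minor' version string of a Python executable."""
    result = subprocess.run(
        [executable, "-c", "import sys; print('%d.%d' % sys.version_info[:2])"],
        stdout=subprocess.PIPE,
        universal_newlines=True,  # decode stdout to str (works on Python 3.6)
        check=True,
    )
    return result.stdout.strip()


def pin_pyspark_python(executable=None):
    """Point PYSPARK_PYTHON at one interpreter (default: this one).

    Assumption: call this BEFORE creating the SparkContext, because
    PySpark reads PYSPARK_PYTHON when the context is initialised.
    """
    python = executable or sys.executable
    os.environ["PYSPARK_PYTHON"] = python
    return python


if __name__ == "__main__":
    driver_version = "%d.%d" % sys.version_info[:2]
    default_python = shutil.which("python")  # what workers use if unset
    if default_python:
        try:
            print("driver:", driver_version,
                  "| default 'python':", python_version_of(default_python))
        except (OSError, subprocess.CalledProcessError):
            print("could not query", default_python)
    print("PYSPARK_PYTHON ->", pin_pyspark_python())
    # Then create the context, e.g. (requires pyspark to be installed):
    # import pyspark
    # sc = pyspark.SparkContext("local[*]")
```

Run it in the first notebook cell, before anything creates `sc`. If a context already exists, restarting the kernel is probably the simplest option, because the variable is only consulted when a new context starts.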
